Moonshot AI’s Kimi K2 Thinking, released in 2025, is an open-source Mixture-of-Experts (MoE) large language model (LLM) with about 1 trillion total parameters, of which 32 billion are activated per token. Backed by Alibaba and developed by a team of about 200, it is engineered for agentic work: reasoning, tool use, and autonomous problem-solving. Its training techniques and benchmark results put it in competition with proprietary models such as OpenAI’s GPT series and Anthropic’s Claude models.

Origins and Development: From Startup to AI Powerhouse

Moonshot AI, established in 2023, focuses on agentic intelligence: an AI system’s ability to perceive, plan, reason, and act in dynamic environments. Kimi K2 Thinking builds on the K2 series, whose pre-training and post-training methods target data scarcity and token efficiency. The model was trained on 15.5 trillion high-quality tokens at a reported cost of about $4.6 million, using the MuonClip optimizer, which kept pre-training free of loss spikes and made scaling stable.

Because high-quality data is in limited supply, the development treats token efficiency as a key scaling factor. Synthetic rephrasing of knowledge and math data amplifies the learning signal without overfitting, while the architecture, derived from DeepSeek-V3, tunes sparsity for better performance under a fixed compute budget.

Architectural Innovations: MoE at Trillion-Parameter Scale

Kimi K2 Thinking’s MoE architecture has 1.04 trillion total parameters, of which only 32 billion are activated per token, which cuts compute per token while preserving performance. It uses Multi-head Latent Attention (MLA) with 64 heads, half of DeepSeek-V3’s, to reduce inference overhead on long-context tasks. Scaling-law analyses guided the choice of 384 experts at a sparsity of 48 (8 experts active per token), balancing performance gains against infrastructure complexity.
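The figures above can be checked with a little arithmetic. The sketch below assumes that "sparsity" means the ratio of total experts to experts activated per token, and it uses a toy top-k selection to illustrate routing; the function names are hypothetical and the gate is a stand-in, not the model's actual router.

```python
# Figures quoted in the text above.
TOTAL_PARAMS = 1.04e12   # total parameters
ACTIVE_PARAMS = 32e9     # parameters activated per token
NUM_EXPERTS = 384        # experts per MoE layer
SPARSITY = 48            # assumed definition: total experts / active experts


def experts_per_token(num_experts: int, sparsity: int) -> int:
    """Number of experts selected for each token under the assumed definition."""
    if num_experts % sparsity != 0:
        raise ValueError("sparsity must divide the expert count evenly")
    return num_experts // sparsity


def active_fraction(active: float, total: float) -> float:
    """Share of all weights that take part in processing one token."""
    return active / total


def route_top_k(scores: list, k: int) -> list:
    """Toy router: indices of the k highest gate scores, best first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


if __name__ == "__main__":
    k = experts_per_token(NUM_EXPERTS, SPARSITY)
    share = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    print(f"experts selected per token: {k}")
    print(f"share of weights active per token: {share:.1%}")
```

Under these assumptions, each token touches only a few percent of the weights, which is where the inference savings come from.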

The MuonClip optimizer combines Muon’s token efficiency with QK-Clip, which rescales the query and key projection weights whenever attention logits grow too large, so training proceeds without loss spikes. This stability matters for agentic applications that require sustained reasoning over hundreds of steps.
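To make the logit-clipping idea concrete, here is a minimal single-head sketch in pure Python. It is a simplification under stated assumptions: the threshold value is chosen for illustration, the helper names are hypothetical, and the real optimizer applies the rescaling per head inside the training step with extra handling for MLA, none of which is shown here.

```python
import math

TAU = 100.0  # assumed logit threshold, chosen for illustration


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def max_attention_logit(x, w_q, w_k):
    """Largest scaled dot product q_i . k_j / sqrt(d) over all token pairs."""
    q, k = matmul(x, w_q), matmul(x, w_k)
    d = len(q[0])
    best = max(sum(qa * kb for qa, kb in zip(qi, kj)) for qi in q for kj in k)
    return best / math.sqrt(d)


def qk_clip(w_q, w_k, s_max, tau=TAU):
    """Shrink W_q and W_k so the observed max logit is pulled back to tau.

    Each matrix is scaled by sqrt(tau / s_max), so their product, and
    therefore every logit, is scaled by tau / s_max.
    """
    if s_max <= tau:
        return w_q, w_k  # logits are within bounds, leave weights untouched
    factor = math.sqrt(tau / s_max)
    w_q_new = [[v * factor for v in row] for row in w_q]
    w_k_new = [[v * factor for v in row] for row in w_k]
    return w_q_new, w_k_new


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]        # two toy token embeddings
    w_q = [[2.0, 0.0], [0.0, 2.0]]      # toy query projection
    w_k = [[3.0, 0.0], [0.0, 3.0]]      # toy key projection
    before = max_attention_logit(x, w_q, w_k)
    w_q, w_k = qk_clip(w_q, w_k, before)
    after = max_attention_logit(x, w_q, w_k)
    print(f"max logit before clip: {before:.2f}, after clip: {after:.2f}")
```

Splitting the correction evenly between the query and key weights keeps the two projections balanced instead of shrinking only one of them.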

Key Features: Agentic Excellence and Beyond

Kimi K2 Thinking excels in interleaving chain-of-thought reasoning with up to 300 sequential tool calls, maintaining coherence in complex workflows. Its features include:

- Agentic Autonomy: Simulates intelligent agents for multi-step planning, tool orchestration, and error correction.

- Extended Context: Supports a 256K-token context window, suited to long-horizon tasks like code analysis or research simulations.

- Multilingual Coding: Handles Python, C++, Java, and other languages with high accuracy, often solving challenges in a single attempt.

- Reinforcement Learning Integration: Uses verifiable rewards and self-critique for alignment in math, coding, and open-ended domains.

- Open-Source Accessibility: Available on Hugging Face, with quantized versions for consumer hardware.
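The interleaving of reasoning and tool calls described above can be pictured as a simple loop: the model proposes an action, the runtime executes the tool, and the result is fed back until the model answers or the call budget runs out. The sketch below is illustrative only. `run_agent`, `scripted_model`, and `add_tool` are hypothetical names, and the scripted policy stands in for a real model call.

```python
from typing import Callable, Dict, List, Tuple

MAX_TOOL_CALLS = 300  # budget quoted in the text

# (kind, tool name or final answer, tool argument); kind is "tool" or "final"
Action = Tuple[str, str, str]


def run_agent(
    model_step: Callable[[List[str]], Action],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_tool_calls: int = MAX_TOOL_CALLS,
) -> Tuple[str, int]:
    """Alternate between model decisions and tool executions.

    Returns the final answer and the number of tool calls used.
    """
    transcript = [f"task: {task}"]
    calls = 0
    while True:
        kind, name, arg = model_step(transcript)
        if kind == "final":
            return name, calls
        if calls >= max_tool_calls:
            raise RuntimeError("tool-call budget exhausted")
        try:
            result = tools[name](arg)
        except Exception as exc:
            # Feed the failure back so the model can correct course.
            result = f"error: {exc!r}"
        calls += 1
        transcript.append(f"{name}({arg}) -> {result}")


def add_tool(arg: str) -> str:
    """Toy tool: sum whitespace-separated integers."""
    return str(sum(int(part) for part in arg.split()))


def scripted_model(transcript: List[str]) -> Action:
    """Stand-in policy: call the tool once, then report its result."""
    if len(transcript) == 1:
        return ("tool", "add", "2 3")
    return ("final", transcript[-1].split("-> ")[1], "")


if __name__ == "__main__":
    answer, used = run_agent(scripted_model, {"add": add_tool}, "add 2 and 3")
    print(f"answer={answer} tool_calls={used}")
```

Returning errors to the model as ordinary observations, rather than aborting, is what lets a long workflow recover from a bad call.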

Community reports highlight its “insane” reliability, with fewer hallucinations and errors in practical use, such as Unity tutorials or Minecraft simulations.

Benchmark Supremacy: Outperforming the Competition

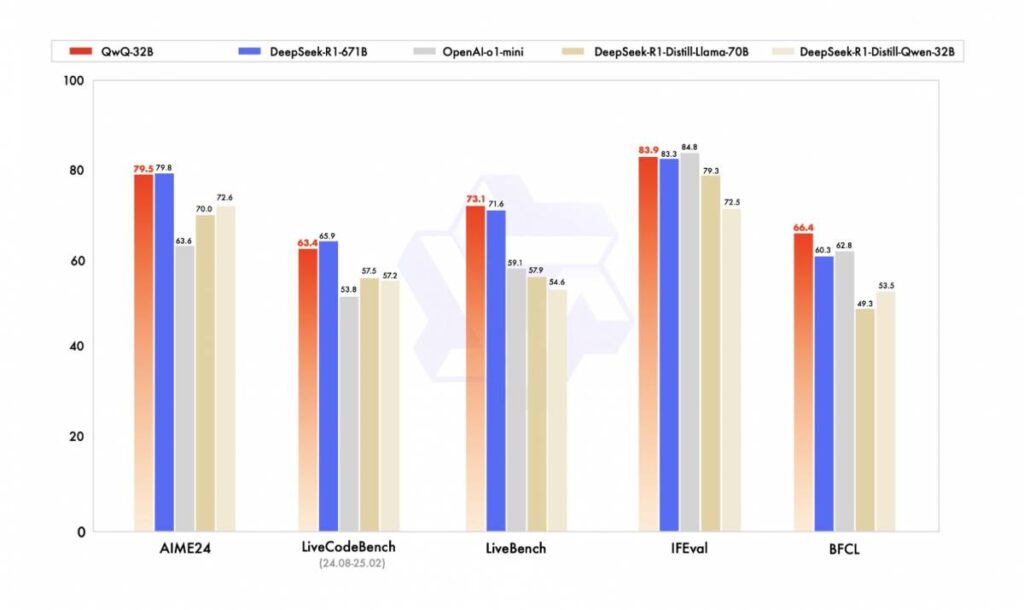

The figures below are non-thinking results reported for the K2 series, which leads open-source rivals and competes with closed models:

- Coding: 65.8% on SWE-Bench Verified (agentic single-attempt), 47.3% on SWE-Bench Multilingual, 53.7% on LiveCodeBench v6.

- Tool Use: 66.1% on Tau2-Bench, 76.5% on ACEBench (English).

- Math & STEM: 49.5% on AIME 2025, 75.1% on GPQA-Diamond, 89.0% on ZebraLogic.

- General: 89.5% on MMLU, 89.8% on IFEval, 54.1% on Multi-Challenge.

- Long-Context & Factuality: 93.5% on DROP, 88.5% on FACTS Grounding (adjusted).

On LMSYS Arena (July 2025), it ranks as the top open-source model with a 54.5% win rate on hard prompts. Users praise its tool use, which they describe as rivaling Claude’s at about 80% lower cost.

Post-Training Mastery: SFT and RL for Agentic Alignment

Post-training transforms Kimi K2’s priors into actionable behaviors via supervised fine-tuning (SFT) and reinforcement learning (RL). A hybrid data synthesis pipeline generates millions of tool-use trajectories, blending simulations with real sandboxes for authenticity. RL uses verifiable rewards for math/coding and self-critique rubrics for subjective tasks, enhancing helpfulness and safety.
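A verifiable reward is one a program can compute without human judgment. The sketch below shows the idea for math: extract the final answer from a completion and compare it exactly with a reference. The answer format, the regular expression, and the function names are assumptions made for illustration; the source does not describe the actual reward pipeline.

```python
import re
from fractions import Fraction
from typing import Optional

# Assumed convention: the completion ends with a line like "Answer: 3/4".
ANSWER_PATTERN = re.compile(r"answer\s*[:=]\s*(-?\d+(?:/\d+|\.\d+)?)", re.IGNORECASE)


def extract_final_answer(completion: str) -> Optional[Fraction]:
    """Return the last stated answer as an exact fraction, or None."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return None
    try:
        return Fraction(matches[-1])
    except (ValueError, ZeroDivisionError):
        return None


def math_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the stated answer equals the reference exactly."""
    predicted = extract_final_answer(completion)
    if predicted is None:
        return 0.0
    return 1.0 if predicted == Fraction(reference) else 0.0


if __name__ == "__main__":
    sample = "Half of one is one half. Answer: 1/2"
    print(math_reward(sample, "0.5"))
```

Comparing exact fractions rather than strings means equivalent forms such as 1/2 and 0.5 receive the same reward.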

Availability and Integration: Empowering Developers

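Hosted on Hugging Face (moonshotai/Kimi-K2-Thinking) and GitHub, Kimi K2 is also accessible via APIs on OpenRouter and Novita.ai, with pricing starting at $0.15 per million input tokens. Community 4-bit and 1-bit quantizations make it possible to run the model with a 24GB GPU, but only with heavy offloading: even at 1 bit per parameter, a 1-trillion-parameter model needs roughly 125GB, so most of the weights must sit in system RAM or on disk. Community fine-tunes aimed at stronger reasoning are also emerging.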
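As a rough sketch of API access, the snippet below builds a chat request for OpenRouter's OpenAI-compatible endpoint and estimates input cost from the price quoted above. The model slug and the `OPENROUTER_API_KEY` environment variable name are assumptions; check the provider's model list and documentation before relying on them. The request is only sent if a key is present.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "moonshotai/kimi-k2-thinking"  # assumed slug; verify with the provider
INPUT_PRICE_PER_MILLION = 0.15            # USD, starting price quoted in the text


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Prepare (but do not send) a chat-completion request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def estimate_input_cost(input_tokens: int, price: float = INPUT_PRICE_PER_MILLION) -> float:
    """Input-side cost in USD at a flat per-million-token price."""
    return input_tokens / 1_000_000 * price


if __name__ == "__main__":
    key = os.environ.get("OPENROUTER_API_KEY")  # assumed variable name
    if key:
        request = build_request("Summarize what an MoE model is.", key)
        with urllib.request.urlopen(request, timeout=60) as response:
            body = json.load(response)
        print(body["choices"][0]["message"]["content"])
    else:
        print(f"2M input tokens would cost about ${estimate_input_cost(2_000_000):.2f}")
```

Output tokens are billed separately and usually at a higher rate, so this estimate covers only the prompt side.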

Comparative Edge: Why Kimi K2 Stands Out

Compared with GPT-4o, it is stronger on agentic tasks at lower cost. Compared with Claude 3.5 Sonnet, it matches on coding and leads on math. Because it is open-source, it makes frontier-level AI available without a subscription and leaves room for community innovation.

Future Horizons: Challenges and Potential

Kimi K2 signals China’s AI ascent, emphasizing ethical, efficient practices. Challenges include speed optimization and hallucination reduction, with updates planned. Its impact spans healthcare, finance, and education, heralding an era of accessible agentic AI.

Wrap Up

Kimi K2 Thinking redefines open-source AI with trillion-scale power and agentic focus. Its benchmarks, efficiency, and community-driven evolution make it indispensable for developers and researchers. As AI evolves, Kimi K2 paves the way for intelligent, autonomous systems.