Want to create your own AI agent that can think, reason, and take action? OpenAI’s new Agent Builder and Agents SDK make it easier than ever to build autonomous AI systems that can use tools, connect to APIs, and even delegate tasks to other agents.

This guide walks you through everything you need to know — from setup and tool creation to multi-agent orchestration and guardrails — using OpenAI’s latest developer features.

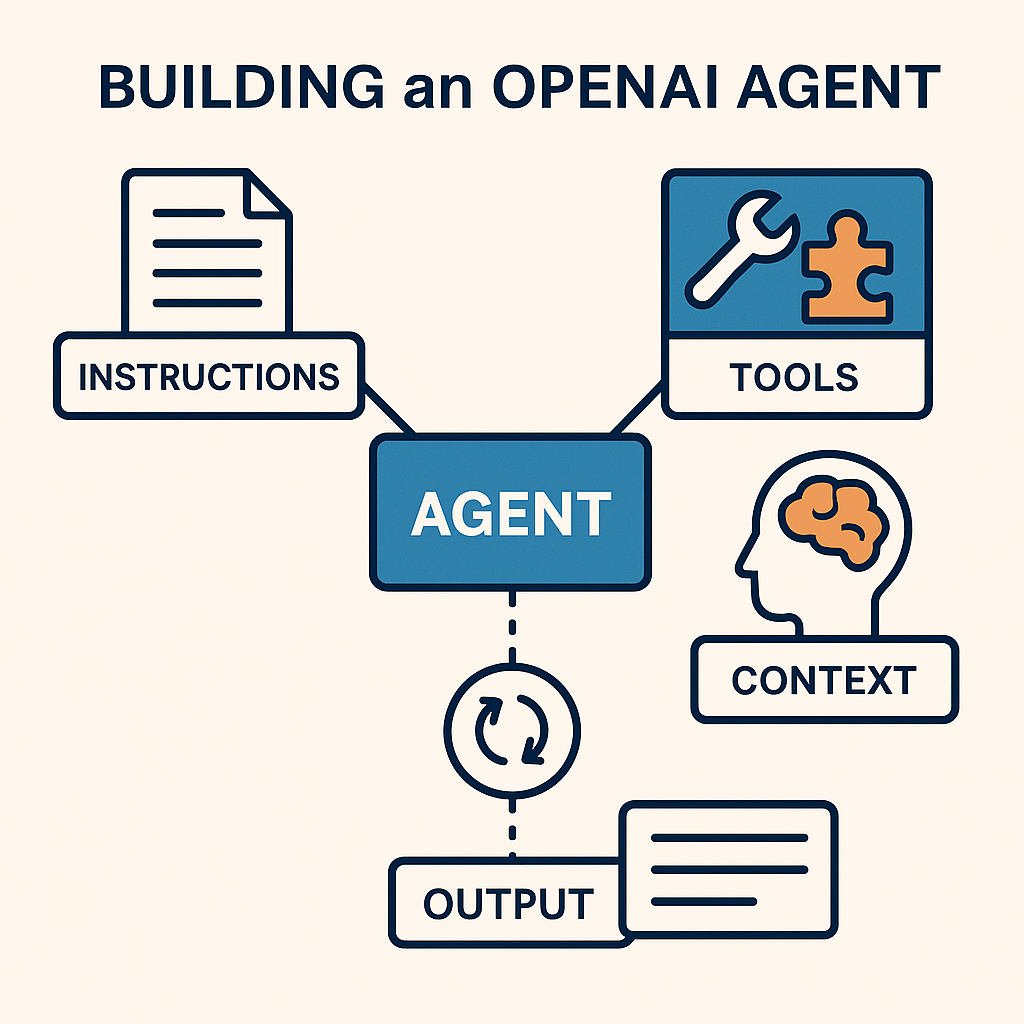

What Is an OpenAI Agent?

An agent in OpenAI’s platform is an intelligent system that:

- Follows a specific instruction set (system prompt or developer message)

- Has access to tools (custom functions, APIs, or built-in modules)

- Can maintain state or memory across interactions

- Supports multi-step reasoning and orchestration between multiple agents

- Implements guardrails and tracing for safety and observability

OpenAI’s agent platform combines Agent Builder, the Responses API, and the Agents SDK so you can develop, debug, and deploy AI agents that perform real work.

1. Choose Your Build Layer

You can build agents in two ways:

| Approach | Pros | Trade-offs |
|---|---|---|
| Responses API | More control; full tool orchestration | Requires managing the agent loop manually |
| Agents SDK | Handles orchestration, tool calling, guardrails, and tracing | Less low-level control, but faster to build with |

OpenAI recommends using the Agents SDK for most use cases.

2. Install Required Libraries

TypeScript / JavaScript

```bash
npm install @openai/agents zod@3
```

```typescript
import { Agent, run, tool } from "@openai/agents";
import { z } from "zod";
```

Python

```python
# Install first with: pip install openai-agents
from agents import Agent, function_tool, Runner
from pydantic import BaseModel
```

3. Define Your Agent

An agent consists of:

- name: readable identifier

- instructions: the system’s behavioral prompt

- model: which GPT model to use

- tools: external functions or APIs

- optional: structured outputs, guardrails, and sub-agents
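The model never calls your function directly. It emits a tool name plus a JSON string of arguments, and the runtime must parse and validate that string before running your code. This is the job the `zod` or `pydantic` schemas do in the SDKs.

The dependency-free sketch below illustrates that contract. `AgentSpec`, `ToolSpec`, and `invokeTool` are hypothetical names, not part of either SDK, and the hand-written `parse` function stands in for a real schema library.

```typescript
// Hypothetical shapes mirroring the components listed above; not the SDK's own types.
interface ToolSpec<Args> {
  name: string;
  description: string;
  // Stand-in for a zod/pydantic schema: returns typed arguments or throws.
  parse: (raw: unknown) => Args;
  execute: (args: Args) => string;
}

interface AgentSpec {
  name: string;
  instructions: string;
  model: string;
  tools: ToolSpec<any>[];
}

const getWeatherSpec: ToolSpec<{ city: string }> = {
  name: "get_weather",
  description: "Return the weather for a given city",
  parse: (raw) => {
    const city = (raw as { city?: unknown } | null)?.city;
    if (typeof city !== "string") throw new Error("expected { city: string }");
    return { city };
  },
  execute: ({ city }) => `The weather in ${city} is sunny.`,
};

const weatherSpec: AgentSpec = {
  name: "Weather Assistant",
  instructions: "You are a helpful assistant that can fetch weather.",
  model: "gpt-4.1",
  tools: [getWeatherSpec],
};

// The model supplies a tool name plus a JSON *string* of arguments:
// look the tool up, parse and validate the arguments, then execute.
function invokeTool(agent: AgentSpec, toolName: string, argsJson: string): string {
  const spec = agent.tools.find((t) => t.name === toolName);
  if (!spec) return `Error: unknown tool "${toolName}"`;
  try {
    return spec.execute(spec.parse(JSON.parse(argsJson)));
  } catch (err) {
    // Report failures as text so the model can retry with corrected arguments.
    return `Error: ${(err as Error).message}`;
  }
}
```

With this sketch, `invokeTool(weatherSpec, "get_weather", '{"city":"Toronto"}')` returns "The weather in Toronto is sunny.". Malformed arguments such as `'{"town":"Toronto"}'` produce an error string instead of crashing the run.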

Example (TypeScript)

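```typescript
import { Agent, tool } from "@openai/agents";
import { z } from "zod";

// A tool the model can call; `parameters` is a zod schema for its arguments.
const getWeather = tool({
  name: "get_weather",
  description: "Return the weather for a given city",
  parameters: z.object({ city: z.string() }),
  async execute({ city }) {
    // Stubbed response; a real tool would call a weather API here.
    return `The weather in ${city} is sunny.`;
  },
});

const agent = new Agent({
  name: "Weather Assistant",
  instructions: "You are a helpful assistant that can fetch weather.",
  model: "gpt-4.1",
  tools: [getWeather],
});
```

Example (Python)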

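```python
from agents import Agent, Runner, function_tool

@function_tool
def get_weather(city: str) -> str:
    """Return the weather for a given city."""
    return f"The weather in {city} is sunny"

agent = Agent(
    name="Haiku agent",
    instructions="Always respond in haiku form",
    model="gpt-5-nano",
    tools=[get_weather],
)

# Run synchronously and print the agent's final answer.
result = Runner.run_sync(agent, "What's the weather in Toronto?")
print(result.final_output)
```

4. Add Context or Memory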

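You can pass a context object into a run, and your tools can read it. This makes it a good place for things like the current user’s ID, account flags, or data-access helpers. The context stays in your own process and is not sent to the model. Anything the model should see has to go through the instructions, the input, or a tool’s output.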
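The split between what your code sees and what the model sees can be sketched without the SDK. In the sketch below, the tool reads the full context, but only its string result is appended to the transcript the model would receive. `UserContext`, `lookupNextMeeting`, `toolStep`, and the `TranscriptEntry` format are hypothetical simplifications.

In the TypeScript SDK itself, a tool's `execute` function receives the run context as its second argument, with your object on its `context` property.

```typescript
// Hypothetical context type, trimmed from the MyContext interface in this section.
interface UserContext {
  uid: string;
  isProUser: boolean;
}

// Simplified transcript entry: only these strings would ever reach the model.
interface TranscriptEntry {
  role: "user" | "tool";
  content: string;
}

// Hypothetical tool body: it reads the user ID from the context instead of
// asking the model for it. A real version would query a calendar API.
function lookupNextMeeting(ctx: UserContext): string {
  if (!ctx.isProUser) return "Calendar access requires a Pro plan.";
  return `User ${ctx.uid} has a meeting at 15:00.`; // placeholder data
}

// One simulated tool step: the tool gets the full context object,
// but only its string result is appended to the transcript.
function toolStep(transcript: TranscriptEntry[], ctx: UserContext): TranscriptEntry[] {
  return [...transcript, { role: "tool", content: lookupNextMeeting(ctx) }];
}

const userCtx: UserContext = { uid: "user123", isProUser: true };
const transcript = toolStep([{ role: "user", content: "What's my next meeting?" }], userCtx);
```

After the step, `transcript` holds the user's question and the tool's sentence. The `isProUser` flag itself never appears in it.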

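```typescript
import { run } from "@openai/agents";

// Local context for this run: tools can read it, but it is never sent to the model.
interface MyContext {
  uid: string;
  isProUser: boolean;
  fetchHistory(): Promise<string[]>;
}

const context: MyContext = {
  uid: "user123",
  isProUser: true,
  fetchHistory: async () => [/* history */],
};

// `agent` is the agent defined in step 3.
const result = await run(agent, "What's my next meeting?", { context });
```

5. Run and Orchestrate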

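```typescript
import { run } from "@openai/agents";

// `agent` is the agent defined in step 3.
const result = await run(agent, "What is the weather in Toronto?");
console.log(result.finalOutput);
```

The SDK runs the agent loop for you. It calls the model, executes any tool calls the model requests, and feeds the results back, repeating until the agent produces a final output.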
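What `run` automates is roughly the loop below, which is also what you would write yourself on top of the Responses API. This is a simplified, synchronous sketch rather than the SDK's implementation. `ModelTurn`, `RunItem`, `runLoop`, and the scripted `fakeModel` are hypothetical, with `fakeModel` standing in for a real model call.

```typescript
// What a (hypothetical) model call returns: tool calls to execute, or a final answer.
type ModelTurn =
  | { kind: "tool_calls"; calls: { name: string; args: Record<string, string> }[] }
  | { kind: "final"; output: string };

// Simplified conversation item appended on every step.
interface RunItem {
  role: "user" | "assistant" | "tool";
  content: string;
}

type ToolFn = (args: Record<string, string>) => string;

// The core agent loop: call the model, run any requested tools, feed the
// results back, and stop on a final answer or when the turn budget runs out.
function runLoop(
  model: (items: RunItem[]) => ModelTurn,
  tools: Record<string, ToolFn>,
  input: string,
  maxTurns = 5,
): { finalOutput: string; items: RunItem[] } {
  const items: RunItem[] = [{ role: "user", content: input }];
  for (let turn = 0; turn < maxTurns; turn++) {
    const reply = model(items);
    if (reply.kind === "final") {
      items.push({ role: "assistant", content: reply.output });
      return { finalOutput: reply.output, items };
    }
    for (const call of reply.calls) {
      const fn = tools[call.name];
      items.push({ role: "tool", content: fn ? fn(call.args) : `Error: unknown tool "${call.name}"` });
    }
  }
  throw new Error(`Agent did not finish within ${maxTurns} turns`);
}

// Scripted stand-in for a real model: request the weather tool once,
// then answer with the most recent tool result.
function fakeModel(items: RunItem[]): ModelTurn {
  const last = items[items.length - 1];
  if (last.role === "user") {
    return { kind: "tool_calls", calls: [{ name: "get_weather", args: { city: "Toronto" } }] };
  }
  return { kind: "final", output: `Here is what I found: ${last.content}` };
}

const weatherTools: Record<string, ToolFn> = {
  get_weather: (args) => `The weather in ${args.city} is sunny.`,
};
```

Consider `runLoop(fakeModel, weatherTools, "What is the weather in Toronto?")`. It records a user, a tool, and an assistant item in that order, then returns "Here is what I found: The weather in Toronto is sunny.". The `maxTurns` guard plays the same role as the `maxTurns` option you can pass to the TypeScript SDK's `run`.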

6. Multi-Agent Systems (Handoffs)

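```typescript
import { Agent } from "@openai/agents";

// Specialist agents; replace "..." with real instructions.
const bookingAgent = new Agent({ name: "Booking", instructions: "..." });
const refundAgent = new Agent({ name: "Refund", instructions: "..." });

// A triage agent that can hand the conversation to either specialist.
const masterAgent = new Agent({
  name: "Master Agent",
  instructions: "Delegate to booking or refund agents when needed.",
  handoffs: [bookingAgent, refundAgent],
});
```

Handoffs let one agent transfer the conversation to a more specialized agent. The model decides when to hand off, so clear instructions on the delegating agent and on each specialist matter.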
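The SDK exposes each entry in `handoffs` to the model as a tool. When the model calls one, the run continues with that agent, which by default receives the conversation so far.

The sketch below reproduces only the routing decision, without the SDK. `AgentNode`, `handoffToolName`, and `resolveHandoff` are hypothetical. The `transfer_to_<name>` format is an assumption modeled on the SDK's default tool naming. The model's tool choice is passed in directly instead of being generated.

```typescript
// Minimal stand-in for an agent that can hand off to specialists (not the SDK's Agent class).
interface AgentNode {
  name: string;
  instructions: string;
  handoffs: AgentNode[];
}

// Assumed naming convention, e.g. "Refund" -> "transfer_to_refund".
function handoffToolName(agent: AgentNode): string {
  return `transfer_to_${agent.name.toLowerCase().replace(/\s+/g, "_")}`;
}

// If the tool the model chose is a handoff, the target agent takes over the
// conversation; any other tool call leaves the current agent in charge.
function resolveHandoff(current: AgentNode, chosenTool: string): AgentNode {
  return current.handoffs.find((a) => handoffToolName(a) === chosenTool) ?? current;
}

const booking: AgentNode = { name: "Booking", instructions: "...", handoffs: [] };
const refund: AgentNode = { name: "Refund", instructions: "...", handoffs: [] };
const triage: AgentNode = {
  name: "Master Agent",
  instructions: "Delegate to booking or refund agents when needed.",
  handoffs: [booking, refund],
};
```

Because the model picks the handoff from tool names and descriptions, distinct specialist descriptions help it route correctly. In the SDK, that is the agent's `handoffDescription` option.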

7. Guardrails and Safety

Guardrails validate an agent’s input or output and can halt a run before something unsafe, such as a risky tool call, goes through. Use them to enforce compliance, prevent misuse, and protect the APIs your tools call.
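Conceptually, an input guardrail is a check on the user's input that can trip a "tripwire" and halt the run before the agent acts. The TypeScript SDK attaches such checks through an agent's `inputGuardrails` and `outputGuardrails` options. For input checks, it signals a tripped check by throwing `InputGuardrailTripwireTriggered`.

The SDK-free sketch below only illustrates the pattern. `GuardrailResult`, `blockSecrets`, `GuardrailTripped`, and the regular expression are hypothetical. A production check would often use a classifier or a small model rather than a pattern match.

```typescript
// Result shape for a check; `tripwireTriggered` mirrors the SDK's field name.
interface GuardrailResult {
  tripwireTriggered: boolean;
  info: string;
}

type InputCheck = (input: string) => GuardrailResult;

// Hypothetical error type raised when any check trips.
class GuardrailTripped extends Error {}

// Hypothetical check: stop inputs that look like they contain a secret API key.
const blockSecrets: InputCheck = (input) => {
  const found = /sk-[A-Za-z0-9]{20,}/.test(input);
  return { tripwireTriggered: found, info: found ? "possible API key in input" : "ok" };
};

// Run every check before the agent sees the input; the first tripped check aborts the run.
function guardedRun(input: string, checks: InputCheck[], agent: (input: string) => string): string {
  for (const check of checks) {
    const result = check(input);
    if (result.tripwireTriggered) throw new GuardrailTripped(result.info);
  }
  return agent(input);
}
```

Running the checks before the agent means a tripped guardrail costs no model call and no tool execution.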

8. Tracing and Observability

By default, the SDK traces every agent run, and you can inspect the traces in the OpenAI Dashboard. Each trace shows which tools were called, the intermediate steps, and any handoffs, which makes it the first place to look when debugging or optimizing an agent.
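A trace is a tree of timed spans: one for the agent run, with children for model calls, tool calls, and handoffs. The SDK records these automatically. The hypothetical `Tracer` class below only illustrates that data shape so the Dashboard view is easier to interpret. Its span kinds and field names are illustrative, not the SDK's tracing schema.

```typescript
type SpanKind = "agent" | "generation" | "tool" | "handoff";

interface SpanRecord {
  kind: SpanKind;
  label: string;
  parent?: number; // index of the enclosing span, if any
  startMs: number;
  endMs?: number;
}

class Tracer {
  readonly spans: SpanRecord[] = [];

  // Open a span and return its index so callers can close it or nest under it.
  start(kind: SpanKind, label: string, parent?: number): number {
    this.spans.push({ kind, label, parent, startMs: Date.now() });
    return this.spans.length - 1;
  }

  end(id: number): void {
    this.spans[id].endMs = Date.now();
  }

  // Wrap a synchronous step so its span is always closed, even if it throws.
  span<T>(kind: SpanKind, label: string, parent: number | undefined, fn: () => T): T {
    const id = this.start(kind, label, parent);
    try {
      return fn();
    } finally {
      this.end(id);
    }
  }
}

const tracer = new Tracer();
const runSpan = tracer.start("agent", "WeatherAgent");
tracer.span("tool", "get_weather", runSpan, () => "Sunny, 25°C");
tracer.end(runSpan);
```

Each span's `endMs - startMs` gives its duration, and the `parent` links reconstruct the tree. That is the kind of information the Dashboard lays out visually.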

9. Choosing Models and Reasoning Effort

- Use reasoning models for multi-step logic or planning

- Use mini/nano models for faster, cheaper tasks

- Tune reasoning effort for cost-performance trade-offs
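These guidelines can be encoded as a small routing policy that picks a model and reasoning effort per task. The `TaskProfile` fields and the mapping below are hypothetical choices, not OpenAI recommendations. The model names are examples from the GPT-5 family, and `minimal`, `low`, `medium`, and `high` are the effort levels GPT-5 models accept. In the SDKs, the model goes in the agent's `model` field and the effort in its model settings.

```typescript
type Effort = "minimal" | "low" | "medium" | "high";

interface TaskProfile {
  multiStep: boolean; // needs planning or chained tool calls
  latencySensitive: boolean; // e.g. interactive chat
}

interface ModelChoice {
  model: string;
  effort: Effort;
}

// Hypothetical policy: reasoning-heavy tasks get a larger model and more effort;
// quick, simple tasks get the smallest, fastest option.
function chooseModel(task: TaskProfile): ModelChoice {
  if (task.multiStep && !task.latencySensitive) return { model: "gpt-5", effort: "high" };
  if (task.multiStep) return { model: "gpt-5-mini", effort: "medium" };
  if (task.latencySensitive) return { model: "gpt-5-nano", effort: "minimal" };
  return { model: "gpt-5-mini", effort: "low" };
}
```

Keeping the policy in one function makes it easy to adjust after you measure cost and quality with evals.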

10. Evaluate and Improve

- Use Evals for performance benchmarking

- Refine your prompts and tool descriptions iteratively

- Test for safety, correctness, and edge cases
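A tiny regression harness can catch prompt or tool-description changes that break known cases, even before you adopt a full evaluation platform. The sketch below is hypothetical and SDK-independent. Each `EvalCase` pairs an input with a checker, and `agentFn` stands in for running your agent and reading its final output. It is synchronous for brevity; with the SDK you would `await` each `run` call.

```typescript
// One test case: an input plus a predicate the output must satisfy.
interface EvalCase {
  input: string;
  check: (output: string) => boolean;
}

interface EvalReport {
  passed: number;
  total: number;
  failures: string[];
}

// Run every case, recording failed checks and thrown errors instead of stopping early.
function runEvals(agentFn: (input: string) => string, cases: EvalCase[]): EvalReport {
  const failures: string[] = [];
  for (const c of cases) {
    try {
      const output = agentFn(c.input);
      if (!c.check(output)) failures.push(`${c.input} -> ${output}`);
    } catch (err) {
      failures.push(`${c.input} -> threw: ${(err as Error).message}`);
    }
  }
  return { passed: cases.length - failures.length, total: cases.length, failures };
}
```

Tracking `passed / total` across prompt revisions gives a quick signal before running larger benchmarks.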

Example: Weather Agent (Full Demo)

```typescript
import { Agent, run, tool } from "@openai/agents";
import { z } from "zod";

const getWeather = tool({
  name: "get_weather",
  description: "Get current weather for a given city",
  parameters: z.object({ city: z.string() }),
  async execute({ city }) {
    // Stubbed data; swap in a real weather API call.
    return { city, weather: "Sunny, 25°C" };
  },
});

const weatherAgent = new Agent({
  name: "WeatherAgent",
  instructions: "You are a weather assistant. Use get_weather when asked about weather.",
  model: "gpt-4.1",
  tools: [getWeather],
  // Structured output: the final answer must match this schema.
  outputType: z.object({
    city: z.string(),
    weather: z.string(),
  }),
});

async function main() {
  const result = await run(weatherAgent, "What is the weather in Toronto?");
  // finalOutput is parsed against outputType (an object with city and weather).
  console.log("Final output:", result.finalOutput);
  // Runs are traced by default; inspect this run in the traces view of the OpenAI Dashboard.
}

main().catch(console.error);
```

Best Practices

- Start with one simple tool and expand

- Use structured outputs (zod, pydantic)

- Enable guardrails early

- Inspect traces to debug tool calls

- Cap the number of agent turns (for example, with the `maxTurns` option to `run` in the TypeScript SDK) to prevent infinite loops

- Monitor latency, cost, and reliability in production

Wrap Up

With OpenAI’s Agent Builder and Agents SDK, you can now create sophisticated AI agents that go beyond chat — they can take real action, use tools, call APIs, and collaborate with other agents.

Whether you’re automating workflows, building personal assistants, or developing enterprise AI systems, these tools give you production-ready building blocks for the next generation of intelligent applications.