TL;DW (Too Long; Didn’t Watch)

Anthropic CEO Dario Amodei joined Dwarkesh Patel for a high-stakes deep dive into the endgame of the AI exponential. Amodei’s hunch is that by 2026 or 2027 we will reach a “country of geniuses in a data center”: AI systems capable of Nobel Prize-level intellectual work across all digital domains. While technical scaling remains remarkably smooth, Amodei warns that the real-world friction of economic diffusion and the ruinous financial risks of $100 billion training clusters are now the primary bottlenecks to total global transformation.

Key Takeaways

- The Big Blob Hypothesis: Intelligence is an emergent property of scaling compute, data, and the breadth of the training distribution; specific algorithmic “cleverness” is often just a temporary workaround for lack of scale.

- AGI is a 2026-2027 Event: Amodei has a strong “hunch” that the technical threshold for a “country of geniuses” arrives in the next 12-24 months, and he is 90% certain we reach genius-level AGI by 2035 at the latest.

- Software Engineering is the First Domino: Within 6-12 months, models will likely perform end-to-end software engineering tasks, shifting human engineers from “writers” to “editors” and strategic directors.

- The $100 Billion Gamble: AI labs are entering a “Cournot equilibrium” where massive capital requirements create a high barrier to entry. Being off by just one year in revenue growth projections can lead to company-wide bankruptcy.

- Economic Diffusion Lag: Even after AGI-level capabilities exist in the lab, real-world adoption (curing diseases, legal integration) will take years due to regulatory “jamming” and organizational change management.

Detailed Summary: Scaling, Risk, and the Post-Labor Economy

The Three Laws of Scaling

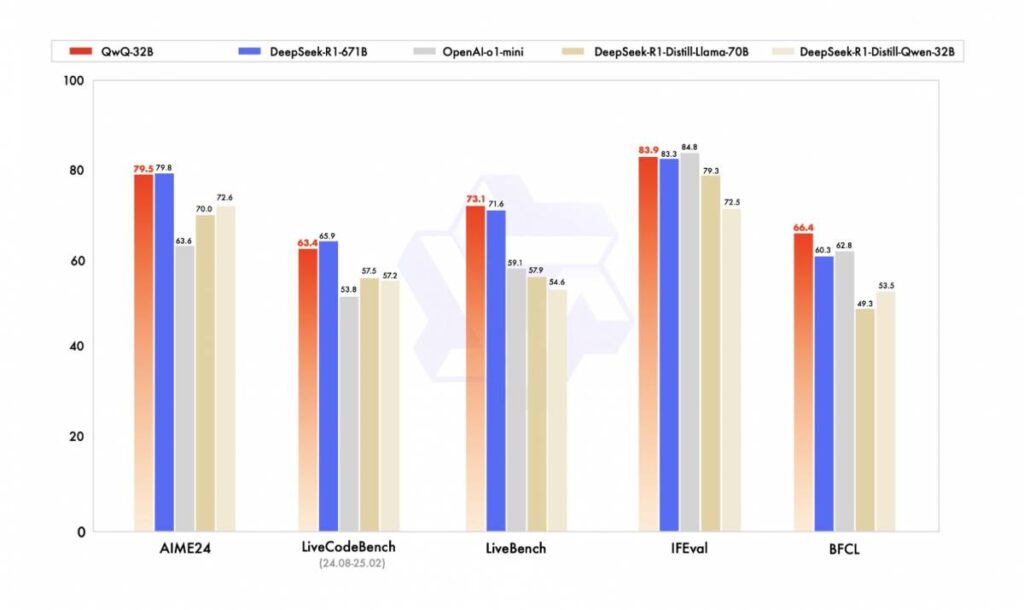

Amodei revisits his foundational “Big Blob of Compute” hypothesis, asserting that intelligence scales predictably when compute and data are scaled in proportion—a process he likens to a chemical reaction. He notes a shift from pure pre-training scaling to a new regime of Reinforcement Learning (RL) and Test-Time Scaling. These allow models to “think” longer at inference time, unlocking reasoning capabilities that pre-training alone could not achieve. Crucially, these new scaling laws appear just as smooth and predictable as the ones that preceded them.

The “Country of Geniuses” and the End of Code

A recurring theme is the imminent automation of software engineering. Amodei predicts that AI will soon handle end-to-end SWE tasks, including setting technical direction and managing environments. He argues that because AI can ingest a million-line codebase into its context window in seconds, it bypasses the months of “on-the-job” learning required by human engineers. This “country of geniuses” will operate at 10-100x human speed, potentially compressing a century of biological and technical progress into a single decade—a concept he calls the “Compressed 21st Century.”

Financial Models and Ruinous Risk

The economics of building the first AGI are terrifying. Anthropic’s revenue has scaled roughly 10x annually (zero to $10 billion in three years), but labs are trapped in a cycle of spending every dollar on the next, larger cluster. Amodei explains that building a $100 billion data center requires a 2-year lead time; if demand growth slows from 10x to 5x per year during that window, the lab collapses. This financial pressure forces a “soft takeoff” where labs must remain profitable on current models to fund the next leap.
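To make the lead-time risk concrete, here is a rough back-of-the-envelope sketch in Python. It is not Amodei's financial model. It assumes, purely for illustration, that revenue compounds at a fixed annual multiple, that the slowdown from 10x to 5x applies to both years of the 2-year build window, and that starting revenue is normalized to 1.0. The function name and the normalization are hypothetical.

```python
def projected_revenue(start: float, annual_multiple: float, years: int) -> float:
    """Revenue after compounding a fixed annual growth multiple (illustrative only)."""
    return start * annual_multiple ** years


LEAD_TIME_YEARS = 2      # data center lead time quoted in the summary
START_REVENUE = 1.0      # hypothetical normalized starting revenue

# Revenue the lab planned for when it committed to the cluster (10x per year).
planned = projected_revenue(START_REVENUE, 10, LEAD_TIME_YEARS)

# Revenue that actually arrives if growth slows to 5x per year.
actual = projected_revenue(START_REVENUE, 5, LEAD_TIME_YEARS)

# Fraction of the planned revenue that materializes.
shortfall_ratio = actual / planned

print(f"planned: {planned:.0f}x, actual: {actual:.0f}x, ratio: {shortfall_ratio:.2f}")
```

Under these assumptions the lab planned for 100x its starting revenue and receives 25x, a quarter of what the cluster was sized for. A halving of the growth multiple compounds into a fourfold gap over the lead time, which is why a modest forecasting error can be ruinous.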

Governance and the Authoritarian Threat

Amodei expresses deep concern over “offense-dominant” AI, where a single misaligned model could cause catastrophic damage. He advocates for “AI Constitutions”—teaching models principles like “honesty” and “harm avoidance” rather than rigid rules—to allow for better generalization. Geopolitically, he supports aggressive chip export controls, arguing that democratic nations must hold the “stronger hand” during the inevitable post-AI world order negotiations to prevent a global “totalitarian nightmare.”

Final Thoughts: The Intelligence Overhang

The most chilling takeaway from this interview is the concept of the Intelligence Overhang: the gap between what AI can do in a lab and what the economy is prepared to absorb. Amodei suggests that while the “silicon geniuses” will arrive shortly, our institutions (the FDA, the legal system, and corporate procurement) are “jammed.” We are heading into a world of radical “biological freedom” and potential cures for most diseases, yet we may be stuck in a decade-long regulatory bottleneck while the “country of geniuses” sits idle in its data centers. The winner of the next era won’t just be the lab with the most FLOPs, but the society that can most rapidly retool its institutions to survive its own technological adolescence.

For more insights, visit Anthropic or check out the full transcript at Dwarkesh Patel’s Podcast.